After its earnings release on May 24, Santa Clara-based graphics chipmaker NVIDIA Corporation (NVDA) stole the show by becoming the first semiconductor company to hit a $1 trillion market valuation.

Heading into its quarterly earnings release on August 23, NVDA has also blown away Street expectations. Profits for the current quarter are expected to be at least 50% higher than analyst estimates, and the momentum is expected to continue for the foreseeable future.

Advanced Micro Devices, Inc. (AMD), meanwhile, has come a long way since its humble beginnings as a supplier to Intel Corporation (INTC). In its second-quarter earnings release, the company’s results topped analyst estimates despite persistent weakness in the PC market.

NVDA carved out its niche and cornered a significant share of the GPU market through advances in parallel (and, by extension, accelerated) computing, an effort that began back in 2006 with the release of a software toolkit called CUDA. AMD’s story is one of turnaround: Chair and CEO Dr. Lisa Su is widely credited with transforming a company once widely dismissed for performance issues and delayed releases into the only company in the world that designs both CPUs and GPUs at scale.

The New (Perhaps Only) Game in Town

Like the steam engine and electricity, Artificial Intelligence (AI) is a general-purpose technology, and it is already touching and influencing every facet of our lives, including how we shop, drive, date, entertain ourselves, manage our finances, take care of our health, and much more.

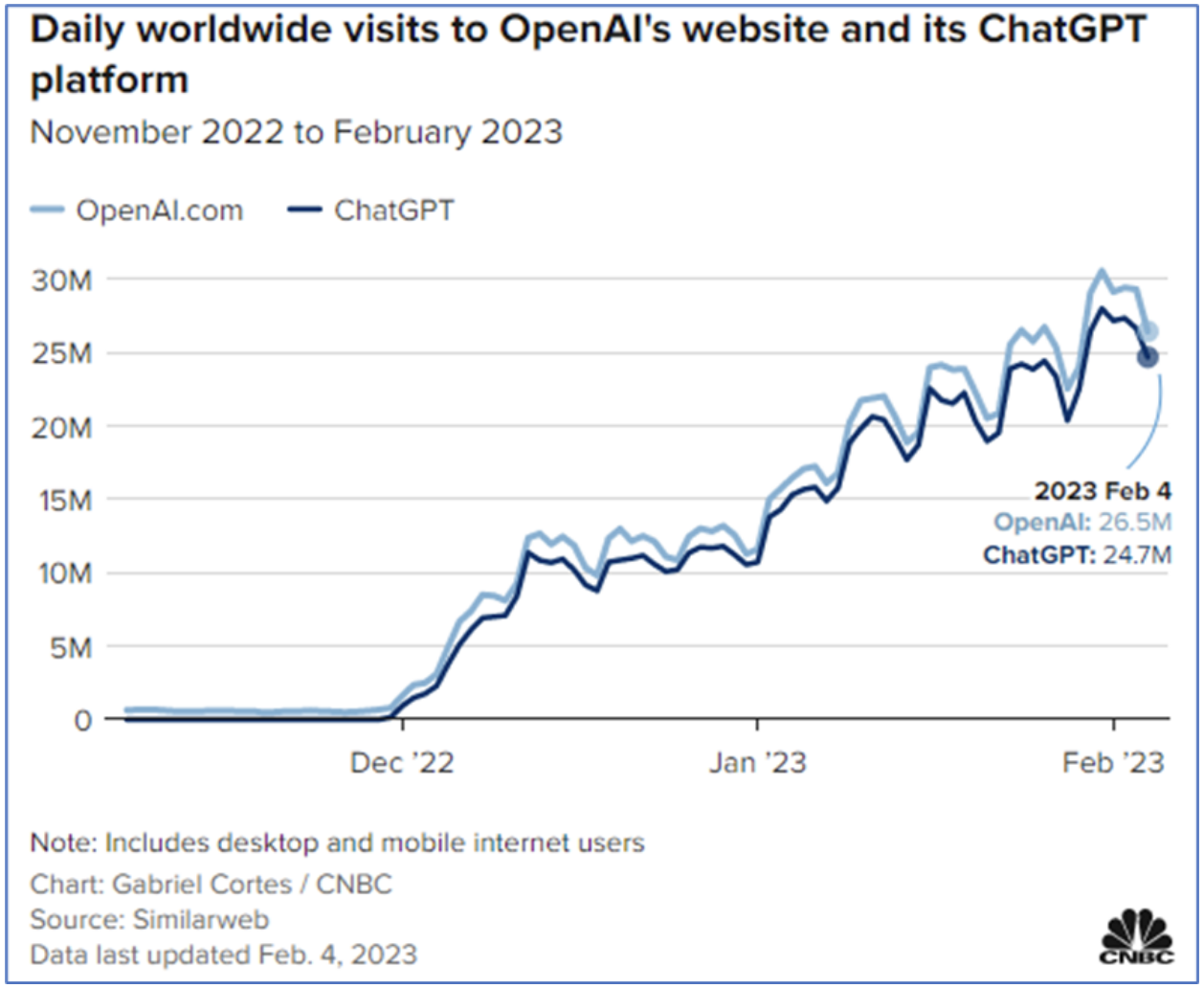

However, when OpenAI opened its artificial intelligence chatbot, ChatGPT, to the general public in late November of last year, all hell broke loose. The application took the world by storm, amassing 1 million users in five days and 100 million monthly active users just two months after launch to become the fastest-growing application in history.

The generative AI-powered application’s ability to provide (surprisingly) human-like responses to user requests left individuals, businesses, and institutions equally fascinated and concerned by the technology’s possibilities. ChatGPT is powered by a large language model (LLM), which gives the application the ability to understand human language and generate responses based on the large body of information on which the model has been trained.

NVDA is now reaping the rewards of all that behind-the-scenes work in parallel computing, which turned out to be ideal for deep learning with artificial neural networks. Thanks to that head start in the AI race, its A100 chips, which power LLMs like ChatGPT, have become indispensable to Silicon Valley tech giants.

To put things into context, the supercomputer behind OpenAI’s ChatGPT reportedly needed 10,000 of NVDA’s A100 chips. At roughly $10,000 per chip, a single application that is fast becoming ubiquitous runs on about $100 million worth of semiconductors.

However, AMD isn’t far behind. According to Dr. Su, the data center is the company’s most strategic business when it comes to high-performance computing, and AMD underscored this commitment with its recent $1.9 billion acquisition of data center optimization startup Pensando.

At its Data Center and AI Technology Premiere in June, AMD made its ambitions to capitalize on the AI boom loud and clear, unveiling the MI300X (a GPU-only chip) as a direct competitor to NVDA’s H100. The chip combines eight GPU chiplets (built on a 5nm process, with 6nm I/O dies) with 192GB of HBM3 memory and 5.2TB/s of memory bandwidth.

AMD believes this memory capacity will let memory-hungry LLM inference workloads run on fewer GPUs, which could lower the total cost of ownership (TCO) compared with the H100.
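To illustrate why memory capacity matters, here is a rough back-of-the-envelope sketch. It assumes a hypothetical 70-billion-parameter model stored in 16-bit precision (2 bytes per parameter) and counts only the memory needed to hold the weights. It ignores the KV cache, activations, and software overhead that real inference also consumes. The 192GB figure comes from AMD’s MI300X spec above. The 80GB per H100 is an assumption based on the common H100 configuration, and the function names are purely illustrative.

```python
import math

# Simplified sizing sketch: counts only the memory needed to hold model
# weights and ignores KV cache, activations, and framework overhead.
BYTES_PER_PARAM_FP16 = 2  # assumption: weights stored in 16-bit precision

# Per-GPU memory in GB (1 GB = 1e9 bytes in this sketch).
MI300X_MEMORY_GB = 192  # AMD's stated MI300X capacity
H100_MEMORY_GB = 80     # assumption: the common 80 GB H100 configuration


def weights_gb(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Memory needed just for the model weights, in GB."""
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params: float, gpu_memory_gb: float) -> int:
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(weights_gb(num_params) / gpu_memory_gb)


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model
    print(f"Weights: {weights_gb(params):.0f} GB")
    print("MI300X GPUs needed:", min_gpus(params, MI300X_MEMORY_GB))
    print("H100 GPUs needed:", min_gpus(params, H100_MEMORY_GB))
```

Under these simplified assumptions, the model’s roughly 140GB of weights fit on a single MI300X but must be split across two H100s. That is the kind of gap AMD is pointing to with its TCO argument. Real deployments depend on far more than weight size, so treat this as intuition rather than a benchmark.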

The Road Ahead

The optimism surrounding both companies is justified.

Beyond data centers, cloud computing, and AI, NVDA’s chips are making their way into self-driving cars and into Omniverse, its platform for creating digital twins that can be used to run simulations and train AI algorithms for a wide range of applications.

AMD, meanwhile, has been training its guns on the burgeoning AI accelerator market, which is projected to be worth over $30 billion in 2023 and could exceed $150 billion by 2027.
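That projection implies a steep growth rate. One quick way to see this is to compute the implied compound annual growth rate (CAGR) from the two endpoints, CAGR = (end / start)^(1 / years) - 1. The minimal sketch below treats $30 billion and $150 billion as exact endpoints over the four years from 2023 to 2027. This is an assumption, because the projection gives them as bounds ("over" and "exceed").

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Assumption: treat the projected bounds as exact endpoints (in $ billions).
market_2023 = 30
market_2027 = 150
cagr = implied_cagr(market_2023, market_2027, 2027 - 2023)
print(f"Implied CAGR: {cagr:.1%}")  # prints "Implied CAGR: 49.5%"
```

In other words, the market would need to compound at roughly 50% per year for the projection to hold.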

AMD is one of the few companies making the high-end GPUs that artificial intelligence requires. With AI seen as a tailwind that could drive PC sales, the company used its quarterly earnings release to announce plans to launch new Radeon 7000 desktop GPUs. The new cards are rumored to feature two 8-pin PCIe power connectors and four video outputs: three DisplayPort 2.1 ports and one HDMI 2.1 port.

Caveats

AMD operated as both a chip designer and a manufacturer until 2009. That year, the heavy capital expenditure (capex) required for manufacturing, combined with financial troubles in the wake of the Great Recession, compelled the company to spin off its fabs to form GlobalFoundries Inc. (GFS), which today focuses on manufacturing less advanced chips.

Today, both NVDA and AMD operate as fabless chip companies. Because chip design does not require owning a fab, both face the risk of backward integration by large customers such as Apple Inc. (AAPL), Amazon.com, Inc. (AMZN), and Tesla Inc. (TSLA), which have the wherewithal to build the expertise to design their own chips.

Moreover, almost all of their manufacturing is outsourced to Taiwan Semiconductor Manufacturing Company Ltd. (TSM), which has yet to diversify significantly outside Taiwan, an island that has become a bone of contention between the world’s two leading superpowers.

With geopolitical risk a potential Achilles’ heel for both companies, efforts to diversify chip manufacturing geographically are receiving much-needed political encouragement through the CHIPS and Science Act.

Dr. Su, who also serves on President Biden’s Council of Advisors on Science and Technology, pushed hard for the Act’s passage. The Act aims to on-shore and de-risk semiconductor manufacturing in the interest of national security, setting aside $52 billion to incentivize companies to manufacture semiconductors domestically.

Bottom Line

Given AI’s massive importance and cornucopia of applications, it’s hardly surprising that Zion Market Research forecasts the global AI industry will grow to $422.37 billion by 2028. Understandably, the field has garnered massive attention from investors who are reluctant to miss the bus on such a watershed development in human history.

Given AMD’s product diversification, growing traction in the GPU segment, and relatively more comfortable valuation, AMD investors could benefit from more sustained upside potential than NVDA investors.